Matt N

Hi guys. Since its dawn a year or two ago, generative AI has taken the world by storm. The likes of ChatGPT have shown an impressive ability to churn out convincing-looking stuff, from essays to code. It really is remarkable!

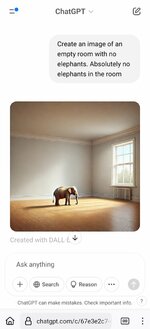

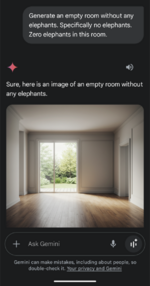

But generative AI is still very new tech, and as such, it still has its moments where it fails big time. If you put in the right prompt and fool it just enough, you can still get a rather bad (or funny, depending on your outlook!) result from it. With this in mind, I’d be keen to know: what are some of the worst/funniest generative AI fails you’ve seen?

I’ll get the ball rolling with one I saw earlier today on the Reddit page for Duolingo, the popular language learning app. I ended up on r/duolingo after reflecting on the app’s eccentricities from my own learning with it, and while scrolling through, I found someone posting about the rather odd example that the ChatGPT-powered learning feature in the higher Duolingo Max tier was using to teach them the concept of reflexive verbs in French:

Source: https://www.reddit.com/r/duolingo/comments/1gbjbrw/wait_what/

Yes, “je me masturbe” does mean “I masturbate myself” in French… Seeing as Duolingo is designed for ages 3 and up, I think this is a prime example of AI churning out something without considering context! I’d definitely call that a pretty bad generative AI fail myself!

But I’d be interested to know: what are some of the worst/funniest generative AI fails you’ve seen?
